Canon shows its impressive new deep learning image processing technology

Canon has revealed its new deep learning image processing technology. At the moment it is not clear when Canon will make this technology available to customers, or whether it will also be implemented directly in its cameras. It is certainly impressive, though, and I hope it will soon be available to every photographer.

Here is the Google-translated text of their press release:

A moment in a particular place is a scene that will never come again, but it can be recorded with a camera. A camera captures wonderful moments, such as a spectacular view you have never seen before, or a moving moment that brings back vivid memories when you look at it later.

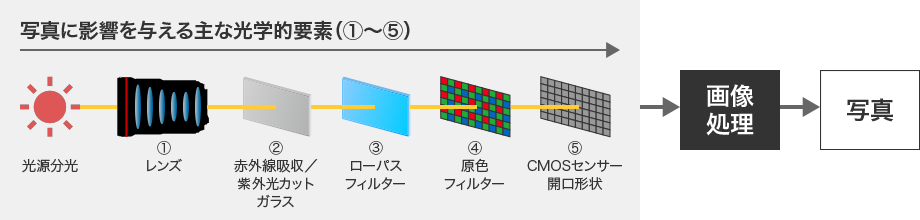

However, photo image quality has always had some unavoidable problems: noise that makes a photo look grainy, moiré patterns that do not exist in the subject, and image blur that follows from the way a lens works. As a result, a photo sometimes recorded information that differed from the actual scene. With a wide-angle lens, the periphery of the image tends to be blurred because optical performance falls off away from the center of the lens.

As AI technology advances and deep learning *1 is applied in a growing variety of ways, Canon, as a leading company with extensive knowledge of cameras and lenses, set out to solve these problems, which had been regarded as inevitable consequences of the principles of photography, by developing deep learning image processing technology in-house.

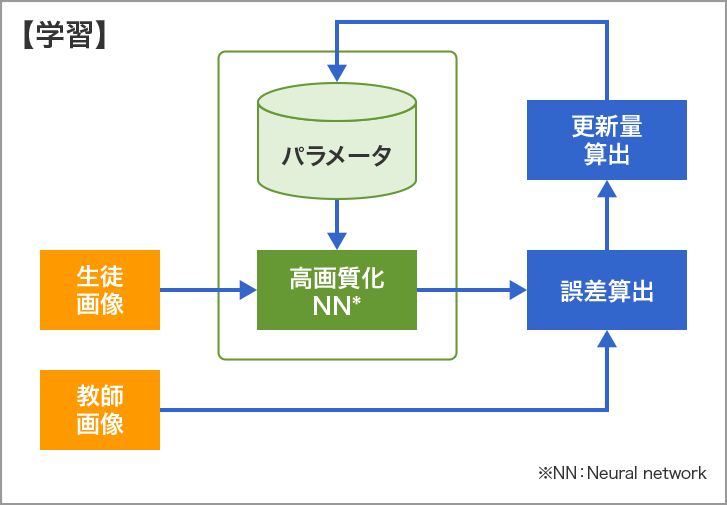

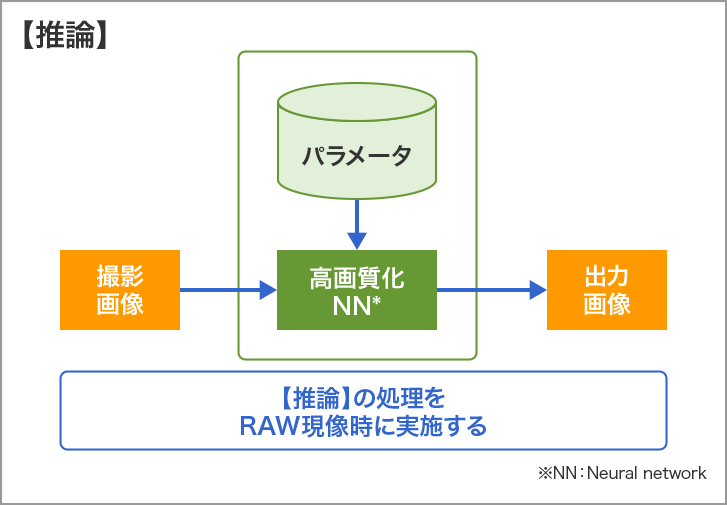

- *1 Deep learning: A method that uses a “neural network,” a mathematical model that mimics the neural circuits of the human brain, to have a computer learn from a large amount of data. The computer itself learns and derives features from the data, producing results such as desirable inferences and judgments.

Securing a large amount of learning data that is important for deep learning technology

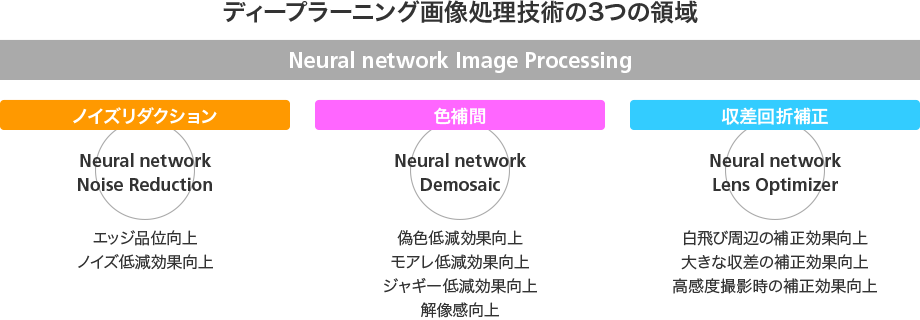

Canon’s deep learning image processing technology, aimed at achieving true-to-life photography, covers three areas: noise reduction, color interpolation, and aberration diffraction correction (lens blur correction). All three have so far been regarded as problems inherent to the principles of photography.

The key to high accuracy in deep learning image processing is how many pairs of “teacher images” and “student images” (= training data) can be prepared. Canon has a huge image database, accumulated through its development of cameras and lenses, that covers every imaginable subject and is stored as RAW data, which holds more detailed information than formats such as JPEG. In addition, Canon was able to collect a large amount of ideal training data by using a camera manufacturer’s unique know-how about how camera settings affect image quality.
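To make the idea of teacher/student pairs concrete, here is a minimal sketch of how one such pair could be built for noise reduction. It is only an illustration: the press release does not describe Canon's data pipeline, and the function name `make_training_pair`, the synthetic gradient image, and the Gaussian noise model are all assumptions made for this example.

```python
import numpy as np

def make_training_pair(teacher, noise_sigma, rng):
    """Return (student, teacher): the student is the teacher plus Gaussian noise.

    Hypothetical helper for illustration; real sensor noise is more complex.
    """
    noise = rng.normal(0.0, noise_sigma, size=teacher.shape)
    student = np.clip(teacher + noise, 0.0, 1.0)  # keep values in [0, 1]
    return student, teacher

rng = np.random.default_rng(0)
# A smooth synthetic gradient stands in for a clean "teacher" image.
teacher = np.linspace(0.0, 1.0, 64 * 64).reshape(64, 64)
student, target = make_training_pair(teacher, noise_sigma=0.05, rng=rng)

# A network would be trained to map `student` back to `target`;
# the mean squared error is the gap it has to close.
mse = float(np.mean((student - target) ** 2))
print(f"MSE between student and teacher: {mse:.5f}")
```

The more such pairs are available, and the more varied the subjects they cover, the more cases a trained network has seen, which is why the size of the image database matters.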

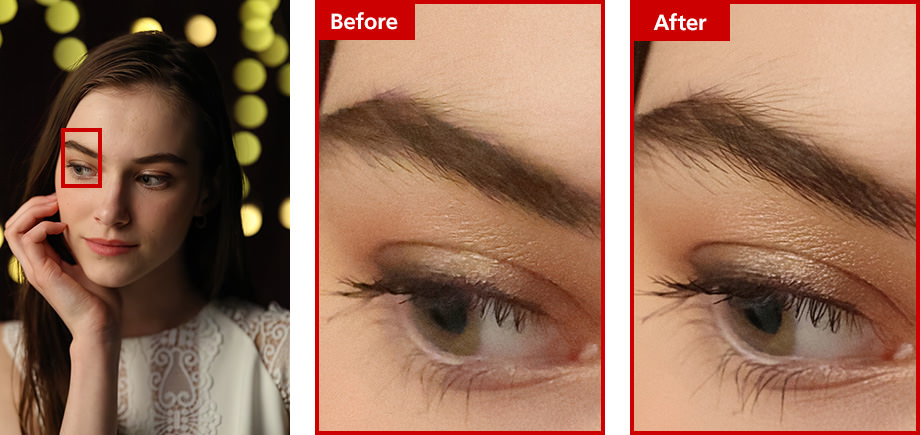

Noise reduction that leaves details and removes only noise

Numerous noise reduction functions have been developed in the past, but removing noise usually removed the details of the subject as well, so in most cases image quality deteriorated. Improvement in this area was in high demand. With no decisive method available, Canon used deep learning to pursue noise reduction that yields clear, high-definition images, making full use of its ability to prepare large volumes of both image data with little deterioration and image data that is difficult to process.

However, deep learning is not a panacea. Depending on the shooting scene, it can produce quite a few “miscorrections” in which parts of the image look worse than with conventional image processing. To clear this high hurdle, Canon put its effort into changing the structure of the neural network itself, revising the training process, and refining the training data. As a result, it established a “Neural network Noise Reduction” function that delivers clear, high-quality images. In high-sensitivity photography, the light signal is amplified and noise is amplified with it; it is now possible to remove that amplified noise and render smooth skin texture (skin tone), which noise used to spoil.

You will be able to express the texture of smooth skin even with high-sensitivity shooting.

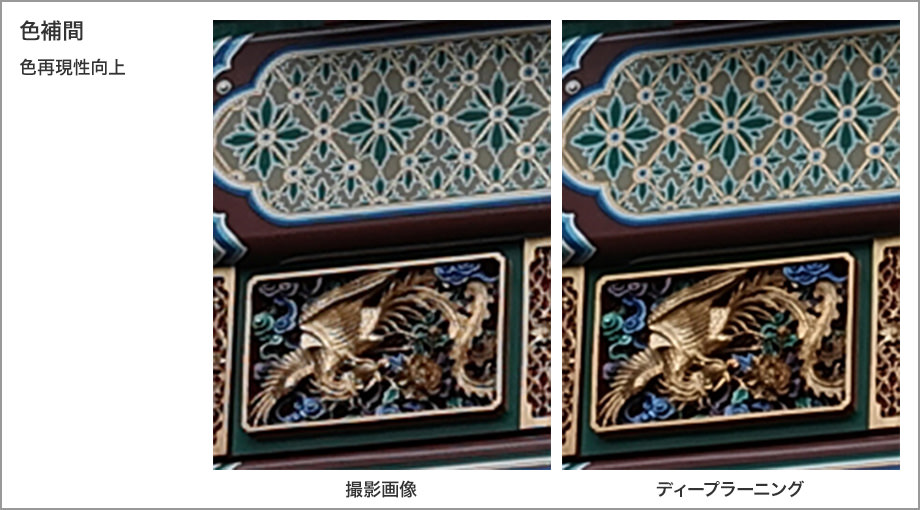

Color interpolation image processing that suppresses the occurrence of “moire” and “jaggies”

In a digital camera, the pixels of the image sensor are arranged in a regular pattern, so when you shoot stripes or checkered patterns the two regular patterns are superimposed, creating moire patterns that do not exist in the subject. This is a fundamental problem. In addition, each pixel of the image sensor detects only one of the three primary colors of light, red (R), green (G), or blue (B). The remaining two colors are estimated from the surrounding pixels to generate RGB data for that pixel (this is called color interpolation processing). Because of this process it was impossible, in principle, to avoid “false colors (color moire)” that do not exist in the actual subject and “jaggies,” in which oblique lines appear jagged. Various processes have been developed in the past to suppress such image degradation, but suppressing it inevitably reduced resolution and color reproducibility.
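The color interpolation step described above can be sketched in a few lines. The example below is a simplified illustration, not Canon's method: it assumes an RGGB Bayer layout and fills in missing colors by averaging the known neighbors in a 3x3 window, and the helper names (`bayer_masks`, `mosaic`, `demosaic_average`) are invented for this sketch. Feeding it one-pixel-wide gray stripes shows how false colors arise.

```python
import numpy as np

def bayer_masks(h, w):
    """Boolean masks for R, G, B sites in an assumed RGGB layout."""
    r = np.zeros((h, w), dtype=bool); r[0::2, 0::2] = True
    b = np.zeros((h, w), dtype=bool); b[1::2, 1::2] = True
    g = ~(r | b)
    return r, g, b

def mosaic(rgb):
    """Keep only the one color each sensor pixel actually measures."""
    h, w, _ = rgb.shape
    raw = np.zeros((h, w))
    for c, m in enumerate(bayer_masks(h, w)):
        raw[m] = rgb[..., c][m]
    return raw

def box3(a):
    """Sum over each pixel's 3x3 neighborhood (zero padded at the border)."""
    p = np.pad(a, 1)
    h, w = a.shape
    return sum(p[i:i + h, j:j + w] for i in range(3) for j in range(3))

def demosaic_average(raw):
    """Estimate missing colors as the mean of known same-color neighbors."""
    h, w = raw.shape
    out = np.empty((h, w, 3))
    for c, m in enumerate(bayer_masks(h, w)):
        known = np.where(m, raw, 0.0)
        estimate = box3(known) / box3(m.astype(float))
        out[..., c] = np.where(m, raw, estimate)
    return out

# One-pixel-wide gray stripes: the subject has no color at all.
stripes = np.zeros((8, 8)); stripes[:, 0::2] = 1.0
gray_stripes = np.stack([stripes] * 3, axis=-1)

recon = demosaic_average(mosaic(gray_stripes))
# Per-pixel spread between channels; zero would mean "still gray".
false_color = recon.max(axis=-1) - recon.min(axis=-1)
print("largest channel spread:", false_color.max())
```

Because the red sites only ever see bright columns and the blue sites only dark ones, the reconstruction comes out strongly tinted even though the subject is gray. This is the kind of failure on fine stripes that conventional suppression has to trade against resolution.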

Utilizing its extensive image database, Canon has established a deep learning image processing technology for color interpolation called “Neural network Demosaic.” The training dataset was constructed with the characteristics of human vision in mind, which is sensitive to differences in brightness and less sensitive to changes in color. Erroneous interpolation is suppressed by focusing on subjects whose colors are difficult to estimate in color interpolation processing. False colors on striped shirts, jaggies that often stand out in oblique lines, and the moire and false colors that stand out in pet photos can now be interpolated accurately, improving resolution and color reproducibility.

The improved color interpolation performance also improves the resolution of details and color reproducibility.

It strongly suppresses the false colors that occur when shooting striped shirts, and you can obtain images with higher resolution.

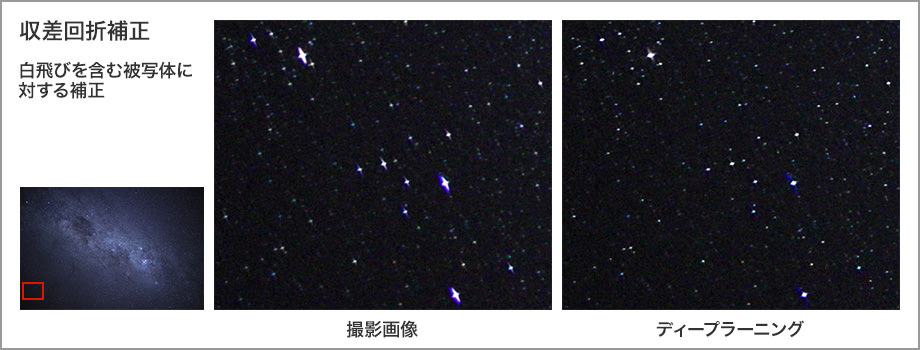

Aberration diffraction correction that even corrects the blur that is particularly conspicuous in “overexposed areas”

The lens that brings light into the camera is subject to problems that optical theory makes inescapable, such as aberration *2 and diffraction blur *3. High-performance lenses have succeeded in minimizing aberrations by making full use of combinations of concave and convex lenses, aspherical lenses, and special materials, but in principle it is impossible to eliminate them completely. The Neural network Lens Optimizer, Canon’s deep learning image processing technology for aberration diffraction correction, corrects these image degradations and can greatly improve perceived resolution.

What kind of aberration and diffraction blur does a lens produce? From the development and design stages, Canon has a thorough understanding of the aberrations and diffraction blur that each lens produces in principle. Using simulation technology based on the design values of each lens, Canon therefore created a large number of high-precision pairs of student images with aberrations and diffraction blur and teacher images without them. Using these as training data for deep learning, it succeeded in correcting the various kinds of blur that appear in fine detail when the periphery of an image needs to be rendered clearly, such as when shooting landscapes or astronomical objects. In addition, it is now possible to correct the blur alone, without the noise amplification that used to accompany blur correction.
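The simulation approach described above, producing a blurred student image from a sharp teacher image, can be sketched as a convolution with a point spread function (PSF). The sketch below is an assumption-laden illustration: it uses a single Gaussian PSF as a stand-in, whereas a real lens PSF comes from design data and varies across the frame, and the function names `gaussian_psf` and `blur` are invented for this example.

```python
import numpy as np

def gaussian_psf(size, sigma):
    """Normalized Gaussian kernel used as a stand-in PSF (size must be odd)."""
    ax = np.arange(size) - (size - 1) / 2.0
    xx, yy = np.meshgrid(ax, ax)
    psf = np.exp(-(xx ** 2 + yy ** 2) / (2.0 * sigma ** 2))
    return psf / psf.sum()

def blur(image, psf):
    """Apply the PSF to the image by direct summation (edge-replicated border)."""
    k = psf.shape[0] // 2
    padded = np.pad(image, k, mode="edge")
    h, w = image.shape
    out = np.zeros((h, w), dtype=float)
    for i in range(psf.shape[0]):
        for j in range(psf.shape[1]):
            out += psf[i, j] * padded[i:i + h, j:j + w]
    return out

# Teacher: a single bright point, like a star on a dark sky.
teacher = np.zeros((33, 33))
teacher[16, 16] = 1.0

psf = gaussian_psf(7, 1.5)
student = blur(teacher, psf)  # what the simulated lens would record

print("teacher peak:", teacher.max(), "student peak:", round(student.max(), 4))
```

The point source is smeared into a copy of the PSF: the total light is preserved but the peak drops, which is the loss of sharpness a correction network would be trained to undo.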

- *2 Aberration: A phenomenon that arises because an optical lens works by refracting light. Examples include “spherical aberration,” in which light does not converge on a single focal point because the lens surface is spherical; “chromatic aberration,” caused by differences in refractive index between wavelengths of light; and “coma,” in which points in the periphery take on a trailing shape like a comet. There are other aberrations as well, such as astigmatism and distortion. They cause image blur, distortion, and color shift due to misalignment of the imaging position.

- *3 Diffraction blur: Blur caused by diffraction, a phenomenon in which light passing the edge of an obstacle bends around into the obstacle’s shadow. When shooting with a small aperture opening, the light spreads out and the sharpness and contrast of the image deteriorate.
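As a rough guide to the scale of diffraction blur, the diameter of the Airy disk (measured to its first dark ring) for an ideal circular aperture is about 2.44 × wavelength × f-number. The short sketch below applies that standard formula. The function name and the default wavelength of 550 nm (green light) are assumptions chosen for illustration and do not come from the press release.

```python
def airy_disk_diameter_um(f_number, wavelength_nm=550.0):
    """Approximate Airy disk diameter (to the first dark ring) in micrometres."""
    wavelength_um = wavelength_nm / 1000.0
    return 2.44 * wavelength_um * f_number

# The blur spot grows in direct proportion to the f-number.
for n in (2.8, 8, 16, 22):
    print(f"f/{n}: about {airy_disk_diameter_um(n):.1f} um")
```

Once the spot is clearly larger than a sensor's pixel pitch, stopping down further costs sharpness, which is the small-aperture effect the footnote describes.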

By correcting the blur caused by aberration, the details of the subject are clearly depicted.

In addition, this aberration diffraction correction is also effective against the blur that is noticeable in overexposed parts of the subject, which used to be difficult to correct because the light was too bright for image information to be obtained. However, simply preparing training data for correcting the blur of blown-out highlights can lead to unexpected correction errors. Furthermore, almost no research had been published on blur correction for blown-out highlights, so it was necessary to discover new issues to overcome and to work out principles that were not yet understood. While improving the training data with the help of CG, Canon refined both the structure of the neural network and the image processing applied after the network runs through repeated trial and error. As a result, highly accurate correction was finally achieved even for the blur that tends to stand out in blown-out highlights.

Blur correction for overexposed areas greatly improves the correction effect for blur that tends to occur in the peripheral areas of starry sky images.

Color fringing that occurs in overexposed areas is also corrected with high precision.

Innovative correction effect by trinity of deep learning image processing

By combining the three areas of image correction realized by deep learning image processing technology, a higher level of correction is possible than by applying each one individually, bringing a significant improvement in image quality, such as the rendering of detail and the three-dimensional feel of photographs. For example, aberration diffraction correction corrects blur without increasing noise, but if noise remains, its effect cannot be fully realized in the details of the image. Combining it properly with noise reduction brings out the full performance of aberration diffraction correction.
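Why noise and blur correction interact can be seen with conventional sharpening, which is used here only as a stand-in for blur correction and is not Canon's method. The sketch below applies a simple one-dimensional unsharp mask to a flat, noisy signal and measures how much the noise grows. The function name `unsharp_1d`, the kernel, and the noise level are assumptions for this illustration.

```python
import numpy as np

def unsharp_1d(signal, amount=1.0):
    """Classic unsharp mask: add back the difference from a blurred copy."""
    kernel = np.array([0.25, 0.5, 0.25])
    blurred = np.convolve(signal, kernel, mode="same")
    return signal + amount * (signal - blurred)

rng = np.random.default_rng(1)
# A flat gray patch with noise: there is no real detail to recover here.
noisy_flat = 0.5 + rng.normal(0.0, 0.02, size=10_000)

sharpened = unsharp_1d(noisy_flat)
# Ignore the two end samples, which are affected by zero padding.
gain = sharpened[1:-1].std() / noisy_flat.std()
print(f"noise grew by a factor of about {gain:.2f}")
```

Sharpening boosts fine-scale variation whether it is detail or noise, so any noise left in the image limits how far blur correction can go.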

For that purpose, it is necessary to develop an algorithm that optimizes the combination and order of the technologies, which is possible because the relevant departments, such as camera development, lens design, image quality evaluation, and product implementation, can work together in close physical proximity. It is no exaggeration to say that this image correction technology was only possible at Canon.

Until now, when shooting nightscapes, setting the lens to a small aperture value (f-number) to let in more light made aberrations appear and lowered resolution. Conversely, increasing the aperture value reduced the amount of light, so the sensitivity had to be raised, resulting in noise. Likewise, for a photo with a deep depth of field that renders the landscape sharply from foreground to background, increasing the aperture value to deepen the depth of field caused diffraction blur and reduced sharpness. Photographers who tried to avoid that often fell into the dilemma of being unable to shoot as they wanted, for example being unable to deepen the depth of field and so unable to achieve the intended expression.
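The trade-off between aperture value and sensitivity follows from standard exposure arithmetic: the light reaching the sensor is proportional to 1 / (f-number squared), so at a fixed shutter speed the ISO must rise by the square of the f-number ratio to keep the same brightness. The sketch below works this out. The function names are hypothetical, and nominal f-numbers such as 2.8 and 5.6 are rounded values.

```python
import math

def stops_between(f_from, f_to):
    """Exposure stops lost when changing aperture from f_from to f_to."""
    return 2.0 * math.log2(f_to / f_from)

def iso_to_compensate(base_iso, f_from, f_to):
    """ISO needed to keep the same brightness at the same shutter speed."""
    return base_iso * (f_to / f_from) ** 2

base_iso = 100
for target in (5.6, 11, 22):
    stops = stops_between(2.8, target)
    iso = iso_to_compensate(base_iso, 2.8, target)
    print(f"f/2.8 -> f/{target}: {stops:.1f} stops, ISO about {iso:.0f}")
```

Every two stops of extra depth of field costs a fourfold increase in ISO, so a night scene shot at a large aperture value quickly runs into the noise problem described above.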

Canon’s deep learning image processing technology greatly reduces aberrations, noise, and moire, making it possible to perform image correction processing that was previously impossible, such as blur correction of subjects including blown-out highlights.

The range of scenes that can be shot at ideal settings, without lowering the ISO sensitivity for fear of degrading image quality, has widened, and it has become possible to reproduce “scenes as they are” down to the smallest detail.

Canon will continue to advance its image processing technology in pursuit of a shooting experience that makes users feel happy.

Appropriately combining the three areas of image correction produces a synergistic effect that greatly reduces aberrations and noise, enabling unprecedented photographic expression.